Hypervisor code quality control

23 June 2021

I started developing the PulseDbg hypervisor some time around 2013. Back then I decided to use MSVC as the compiler, because it came with a comfortable IDE with cool refactoring plugins, native support for the PE format (which would be used for the EFI loader), and so on. To be fair, I had been involved in a bare-metal development project with GCC, makefiles, shell scripts, and all that stuff. But since I was purely a Windows guy at the time, I couldn't get used to the Linux toolchain.

I'd love to think that it was a well-considered decision, but anyway, here I am, having used MSVC for my hypervisor for more than 8 years. I've learned a lot about compiler specifics during this time, and I've moved from Visual Studio 2012 to 2019. The latest upgrade was just a couple of weeks ago (June 2021). And even though I succeeded, I can't say it went smoothly.

The reason for my pain is that I can't fully control code generation for my project. I actually had to develop a routine for checking the optimized code quality in a built binary using one of your favorite disassemblers. And this is something I'd like to share with you today.

The Release build of PulseDbg host is optimized in favor of speed in my settings (/O2). It makes sense, since my goal is to minimize the time spent in VMX Root mode, because you know, VMX exits happen all the time, so I need to handle them very quickly so the virtualized system would not be laggy. By the way, I exclude all of the security checks for the same exact reason. This might be very cocky for a former security researcher, but I really can't sacrifice CPU cycles for that. Since it's a bare metal executable, I also can't use any runtime libraries, exception handling using __try / __except and so on. I just don't have the execution environment, because I am the execution environment!

Another way of cutting corners is to avoid using XMM registers in the code. I just don't want to waste execution time on saving and restoring those on every VM exit. And here is the catch - my executable has to be 64-bit for UEFI to support it. But at the same time Windows x64 uses XMM registers as a part of its ABI. I can't solve this problem with anything else but finding ways for the compiler not to generate such code. This is the most common pain when moving to a newer version of Visual Studio. New compilers are always getting smarter and do a better job of vectorizing code. I appreciate that optimization, but unfortunately I can't use it.

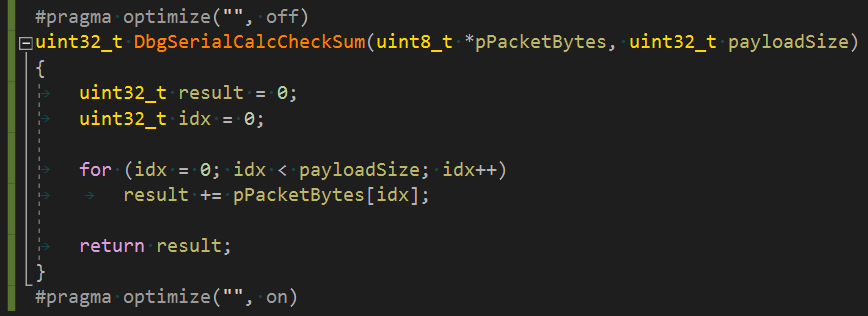

Anyway, let's talk about the latest case of upgrading the project to Visual Studio 2019. Firstly, the code wouldn't even build in the Release configuration. The new compiler was so smart that it found a way of optimizing the code by substituting it with an intrinsic function. I couldn't even fix it with /Oi-. All I could do was add my own implementation of the intrinsic under #pragma optimize("", off). That disables optimizations for my function, and then I just call it directly from the optimized code.

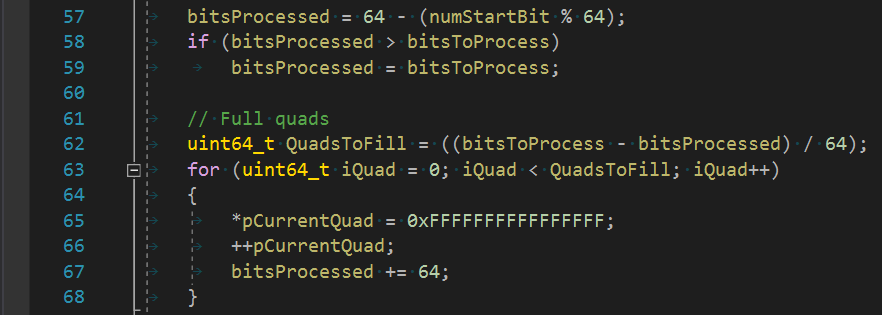

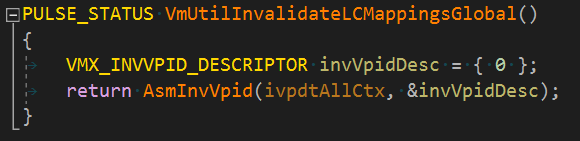

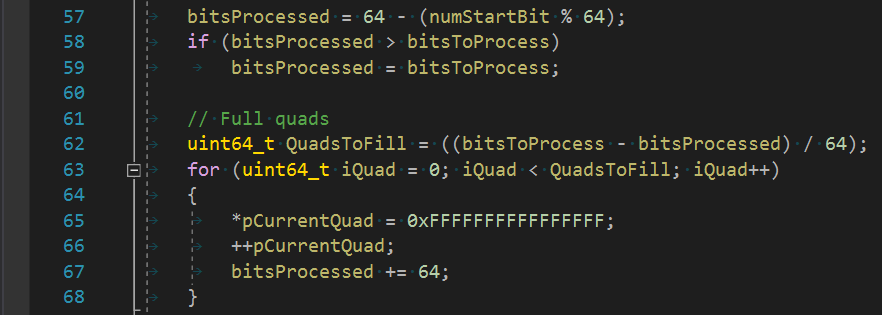

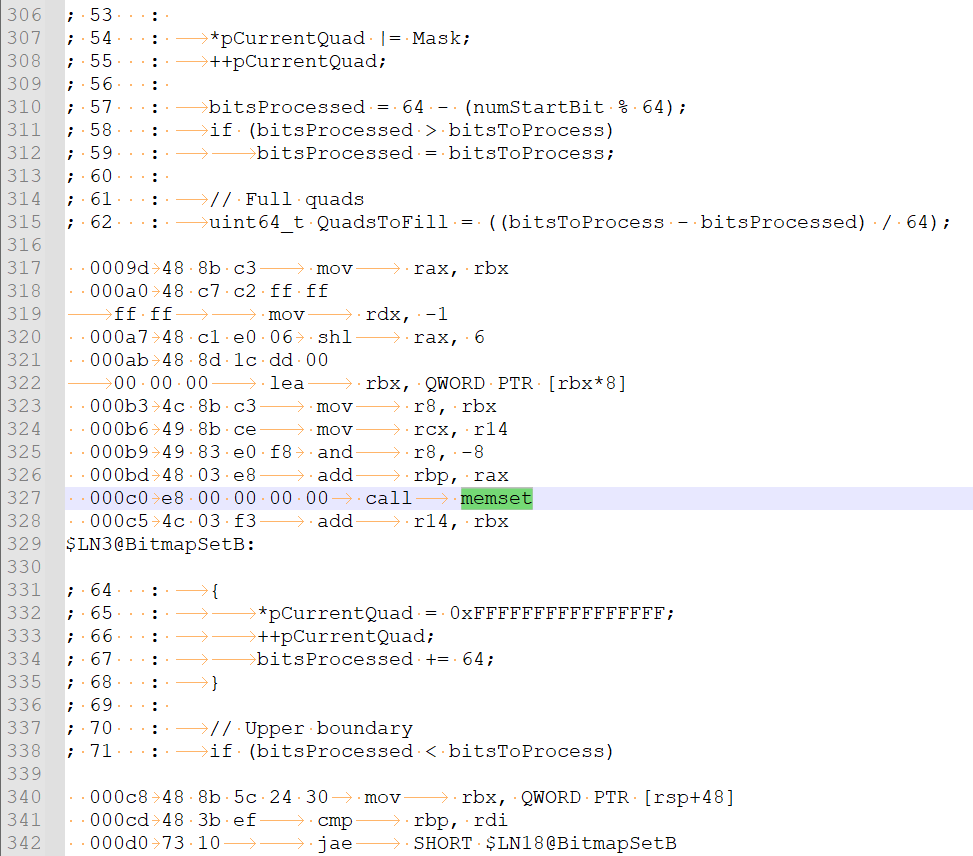

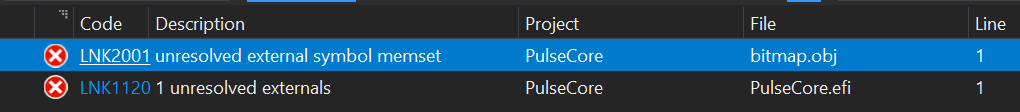

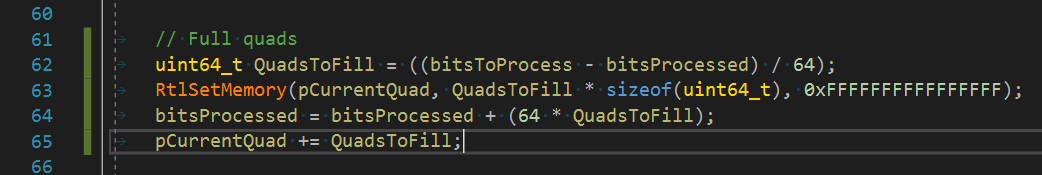

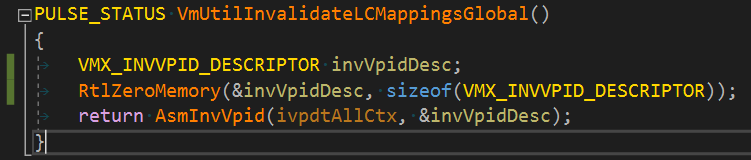

This is the source where the compiler found a memset optimization and generated the following:

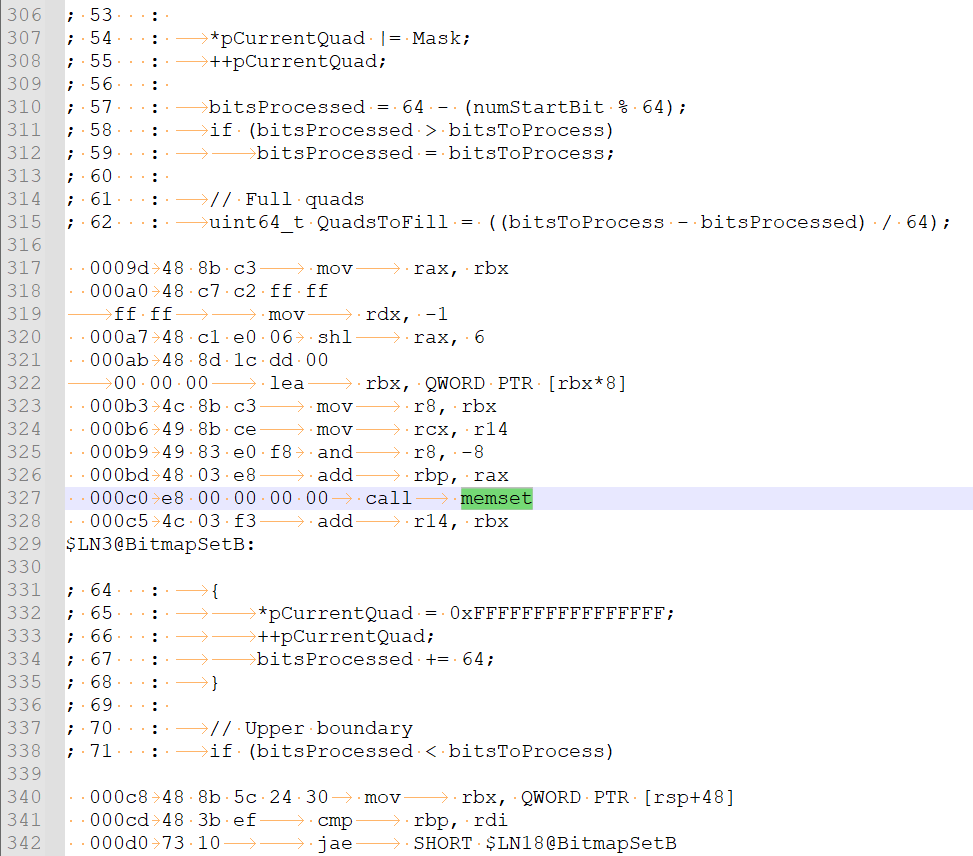

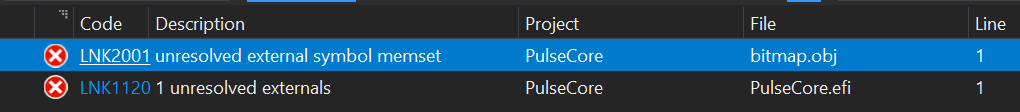

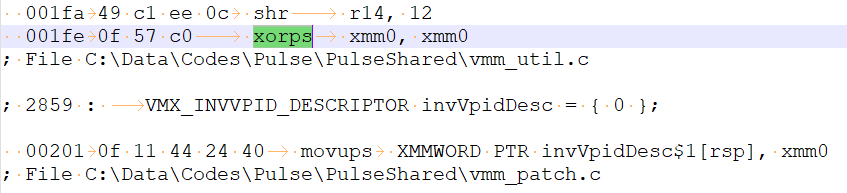

So it would give the following result:

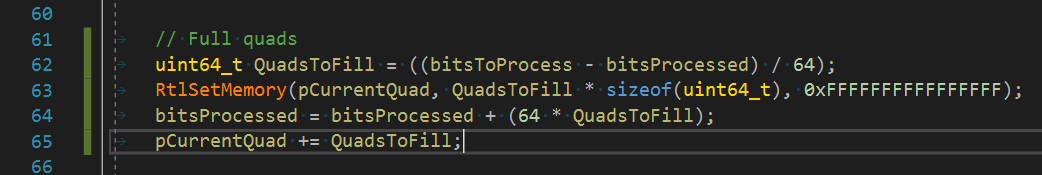

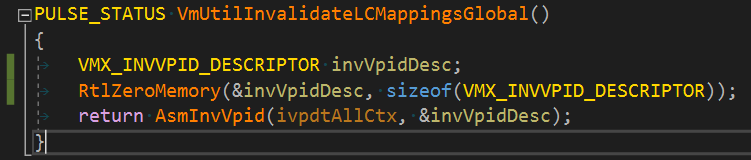

The fix would be:

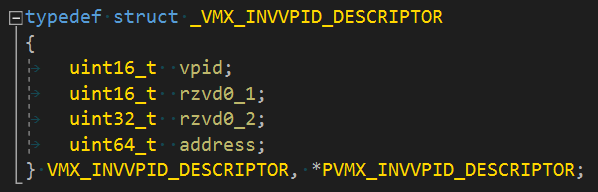

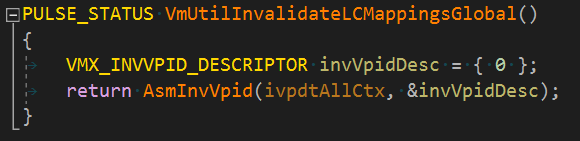

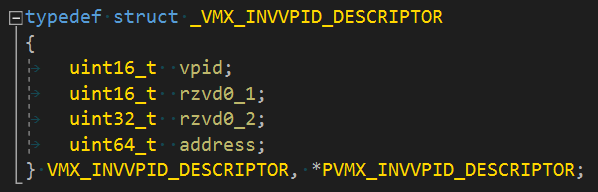

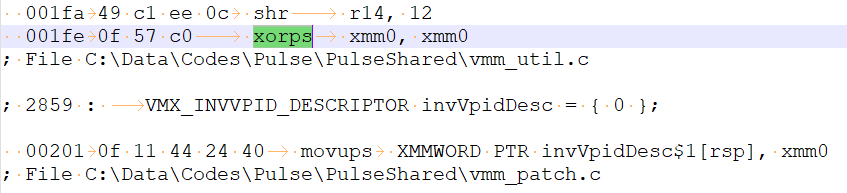
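In text form, the shape of such a fix can be sketched roughly as follows. This is a minimal sketch and not the actual PulseDbg code: the name `HvMemSet` is hypothetical, and the `_MSC_VER` guard is there only so that the snippet also compiles with other compilers, where the MSVC-specific pragma does not apply.

```c
#include <stddef.h>

#ifdef _MSC_VER
#pragma optimize("", off)   /* MSVC: turn optimizations off from here on */
#endif

/* Hypothetical replacement for the memset the compiler wanted to call. */
void *HvMemSet(void *dst, int value, size_t size)
{
    /* volatile keeps other compilers from folding the loop back into memset */
    volatile unsigned char *p = (volatile unsigned char *)dst;
    size_t i;

    for (i = 0; i < size; i++)
        p[i] = (unsigned char)value;

    return dst;
}

#ifdef _MSC_VER
#pragma optimize("", on)    /* MSVC: restore the command-line optimization settings */
#endif
```

The optimized code then calls `HvMemSet(buffer, 0, sizeof(buffer))` explicitly instead of relying on a pattern that the compiler may turn into an intrinsic.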

After I fixed that, everything built without errors. But that doesn't mean the binary is OK. An extra review with a disassembler is needed. What I do is look for any occurrence of the string 'xmm' in the disassembly. This time I found compiler improvements in several places. The first was initializers. It makes sense to initialize 16-byte structures with one xorps, right? Yes, but I still need to fix it by removing the { 0 } piece and leaving the structure uninitialized. Sometimes you get lucky and the structure is fully initialized on its first use. When you're not so lucky, call the equivalent function to initialize it instead.

Initialized with one instruction:

And fixed like this:

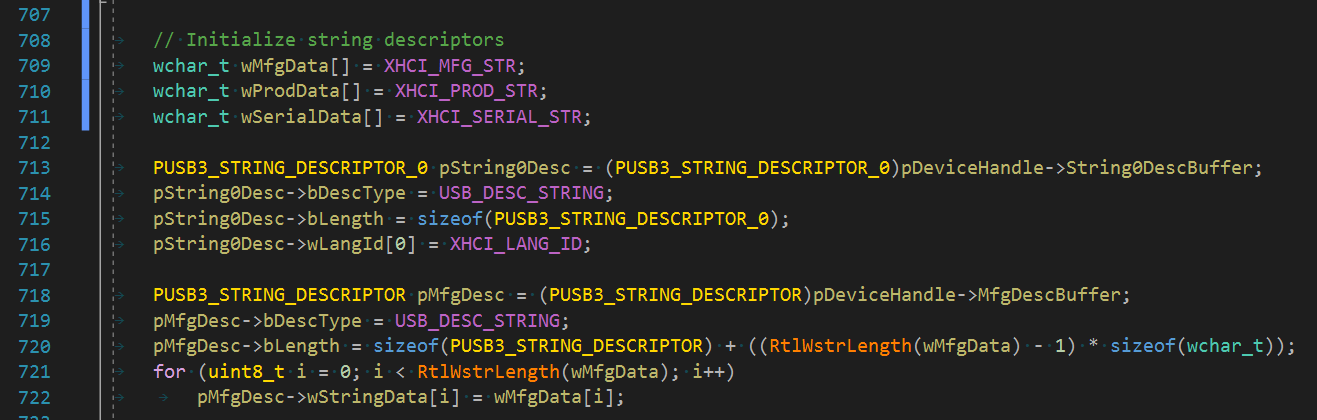

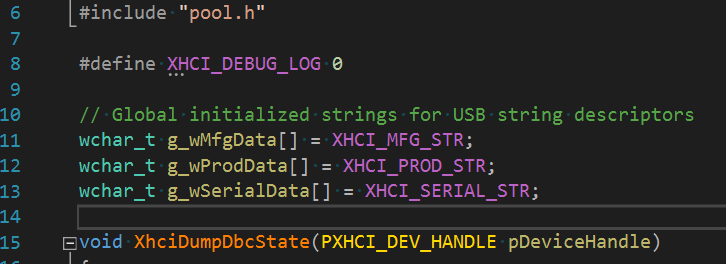

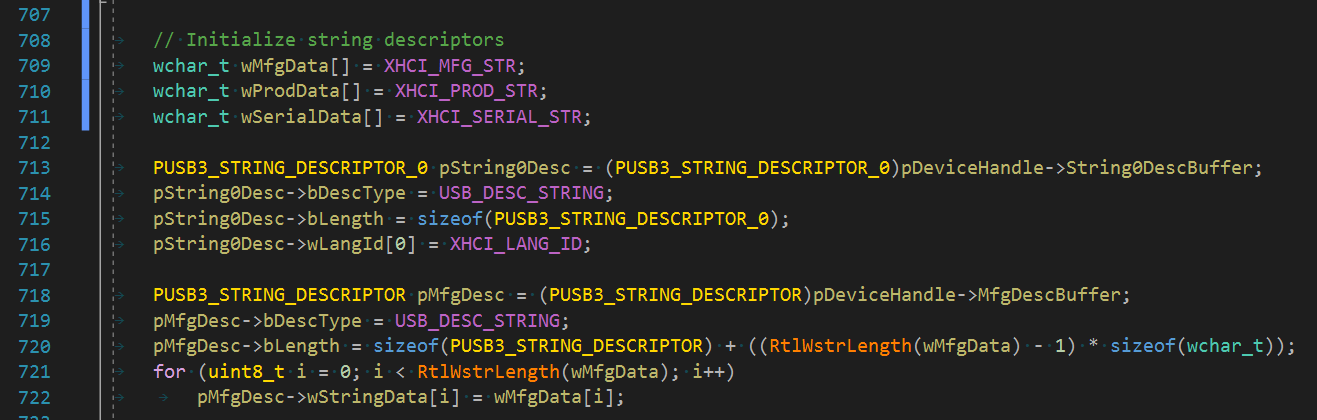

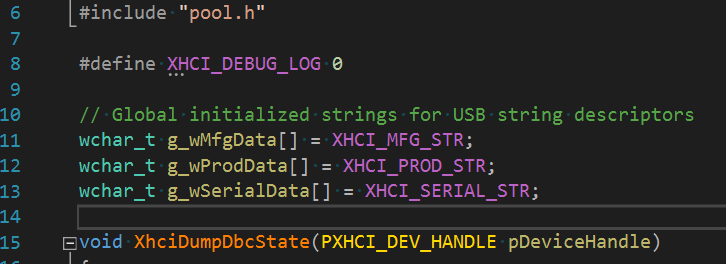
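The "lucky" case can be illustrated with a small sketch. The `REGION` structure and the `RegionEnd` function are hypothetical and exist only for this example. Whether a given compiler version really stops emitting XMM instructions for such code still has to be confirmed in the disassembly.

```c
#include <stdint.h>

/* Hypothetical 16-byte structure, used only for illustration. */
typedef struct {
    uint64_t base;
    uint64_t size;
} REGION;

/* Before: "REGION r = { 0 };" may be zeroed through an XMM register. */

/* After: no initializer, every field is assigned on first use. */
uint64_t RegionEnd(uint64_t base, uint64_t size)
{
    REGION r;           /* deliberately left without an initializer */

    r.base = base;      /* both fields are written before they are read */
    r.size = size;

    return r.base + r.size;
}
```

This only works when every field is written before it is read. Otherwise the structure has to be zeroed explicitly with a non-vectorized routine.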

The other one was string initialization. I realized that I was initializing a stack variable with a literal through a #define. Of course it can't be easily fixed. In this case it makes more sense to create a global initialized variable and then use an unoptimized version of a string copy function.

Should be fixed like this:

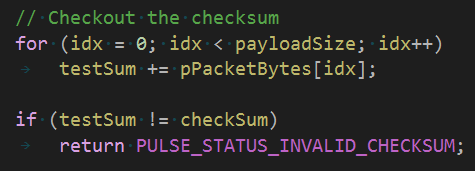

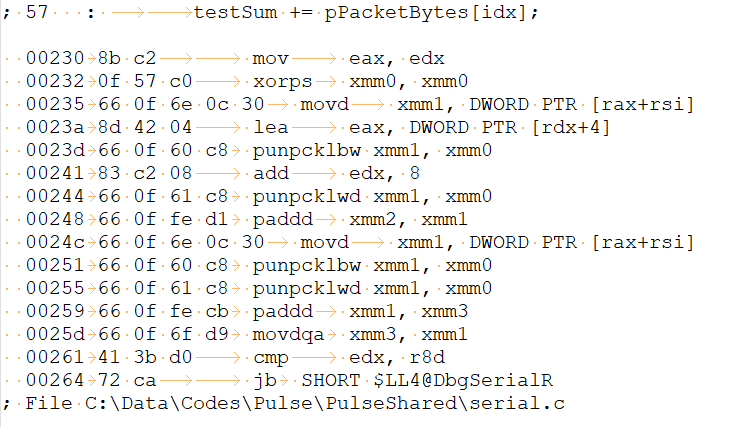

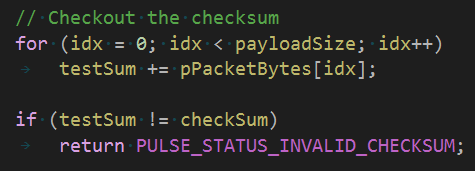

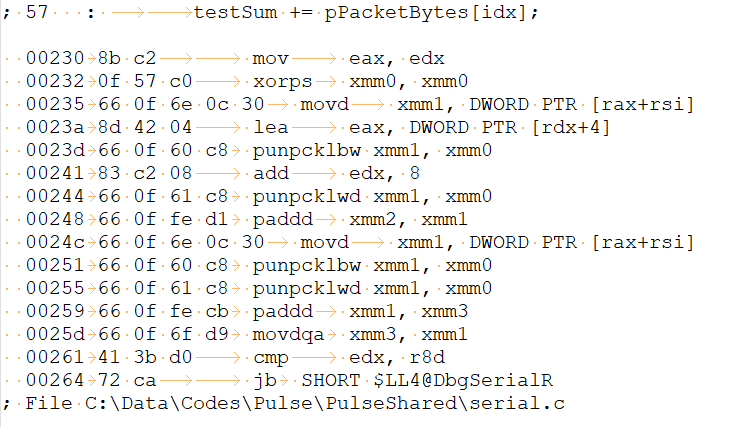

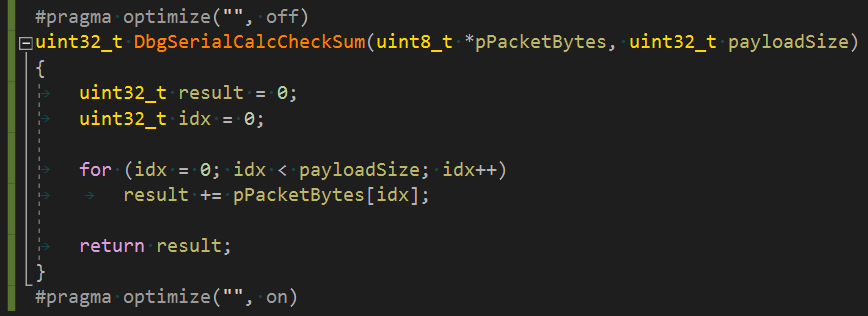
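A rough sketch of the global-variable approach, under stated assumptions: `BANNER_TEXT`, `g_banner`, `HvStrCopy` and `GetBanner` are hypothetical names, and the pragma is applied only when building with MSVC.

```c
#include <stddef.h>

#define BANNER_TEXT "PulseDbg"   /* hypothetical literal */

/* Global initialized variable: the bytes live in the data section,
   so nothing has to be built on the stack at run time. */
static const char g_banner[] = BANNER_TEXT;

#ifdef _MSC_VER
#pragma optimize("", off)        /* keep the copy loop unoptimized on MSVC */
#endif

/* Copies at most dst_size - 1 characters and always terminates dst.
   Returns the number of characters copied. */
size_t HvStrCopy(char *dst, size_t dst_size, const char *src)
{
    size_t i = 0;

    if (dst_size == 0)
        return 0;

    while (i + 1 < dst_size && src[i] != '\0') {
        dst[i] = src[i];
        i++;
    }
    dst[i] = '\0';

    return i;
}

#ifdef _MSC_VER
#pragma optimize("", on)         /* restore the command-line settings */
#endif

/* Used instead of "char text[] = BANNER_TEXT;" on the stack. */
size_t GetBanner(char *out, size_t out_size)
{
    return HvStrCopy(out, out_size, g_banner);
}
```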

The last example was the toughest one. The compiler identified and vectorized the code used for checksum calculation. It is really clever! It is also something I can't fix. All I can do is to create a separate unoptimized function for checksum calculation. And that's what I did.

Brilliantly turns into this:

And fixed like this:
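A minimal sketch of such a separate unoptimized function is shown here. The post does not say which checksum algorithm is used, so a plain 8-bit additive checksum is assumed, and `HvChecksum8` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

#ifdef _MSC_VER
#pragma optimize("", off)   /* MSVC: no vectorization inside this function */
#endif

/* Assumption: 8-bit additive checksum over a byte buffer. */
uint8_t HvChecksum8(const void *data, size_t size)
{
    const uint8_t *p = (const uint8_t *)data;
    uint8_t sum = 0;
    size_t i;

    for (i = 0; i < size; i++)
        sum = (uint8_t)(sum + p[i]);   /* wraps around modulo 256 */

    return sum;
}

#ifdef _MSC_VER
#pragma optimize("", on)    /* restore the command-line settings */
#endif
```

The price is a slower loop, which is acceptable as long as the checksum is not computed on a hot VM exit path.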

I have one more cool lifehack for the case when you forget to declare a function prototype in a compilation unit. The build doesn't fail, since the linker still finds a matching symbol to link with. But in this case the compiler defaults the prototype to something like 'int f()'. I had stupid cases where a function was supposed to return a 64-bit value, but only the lower 32 bits made it through because of the default prototype. So I learned a quick way of detecting that: just search for occurrences of the 'cdqe' instruction in the disassembly. cdqe sign-extends EAX into RAX, which is what the caller does when it believes the function returned an int. If it comes right after a call instruction, there is a good chance the function was called through a default prototype.
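Both disassembly checks from this post (the 'xmm' search and the 'cdqe' right after a call search) could be scripted instead of done by hand. The following is only a crude sketch: it assumes a plain-text listing with lowercase mnemonics and one instruction per line, it matches substrings (so a symbol name containing "call" would confuse it), and all names in it are hypothetical.

```c
#include <stdio.h>
#include <string.h>

typedef struct {
    int prev_was_call;      /* previous instruction line contained "call" */
    int xmm_hits;           /* lines mentioning an XMM register */
    int cdqe_after_call;    /* "cdqe" lines directly following a call */
} SCAN_STATE;

/* Feed one line of a textual disassembly listing into the scanner. */
void ScanLine(SCAN_STATE *s, const char *line)
{
    /* Ignore empty or whitespace-only lines. */
    if (line[strspn(line, " \t\r\n")] == '\0')
        return;

    if (strstr(line, "xmm") != NULL)
        s->xmm_hits++;

    if (strstr(line, "cdqe") != NULL && s->prev_was_call)
        s->cdqe_after_call++;

    s->prev_was_call = (strstr(line, "call") != NULL);
}

/* Scan a whole listing, for example stdin fed from a disassembler.
   Lines longer than the buffer are processed in chunks. */
void ScanListing(FILE *in, SCAN_STATE *s)
{
    char line[512];

    while (fgets(line, sizeof line, in) != NULL)
        ScanLine(s, line);
}
```

A non-zero `xmm_hits` or `cdqe_after_call` is only a hint about where to look. Each hit still needs to be reviewed in the disassembler.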

In the early stages of development I had really nasty cases of stack corruption due to stack home space optimizations, NULL-pointer writes that were hard to detect because the physical zero page is always there, UEFI firmware bugs, etc. I'm happy that the bugs are now much more ordinary and easier to debug, since a lot of work has already been put into a working hypervisor. I never said I don't have bugs. I do have them, I just don't know about them yet.

Happy debugging, guys!

Huge thanks to Jared Candelaria for the edits and Igor Chervatyuk for the feedback.